The world we live in is causal, yet many Artificial Intelligence systems and most Machine Learning systems ignore this reality for the sake of convenience. There is growing interest in making progress on this important topic, and this space highlights our research in that area.

Our Papers on Causality

[preprint] Implicit Causal Representation Learning via Switchable Mechanisms

Generative Causal Representation Learning for Out-of-Distribution Motion Forecasting

In Proceedings of the 40th International Conference on Machine Learning (ICML). PMLR, Honolulu, Hawaii, USA. July 2023.

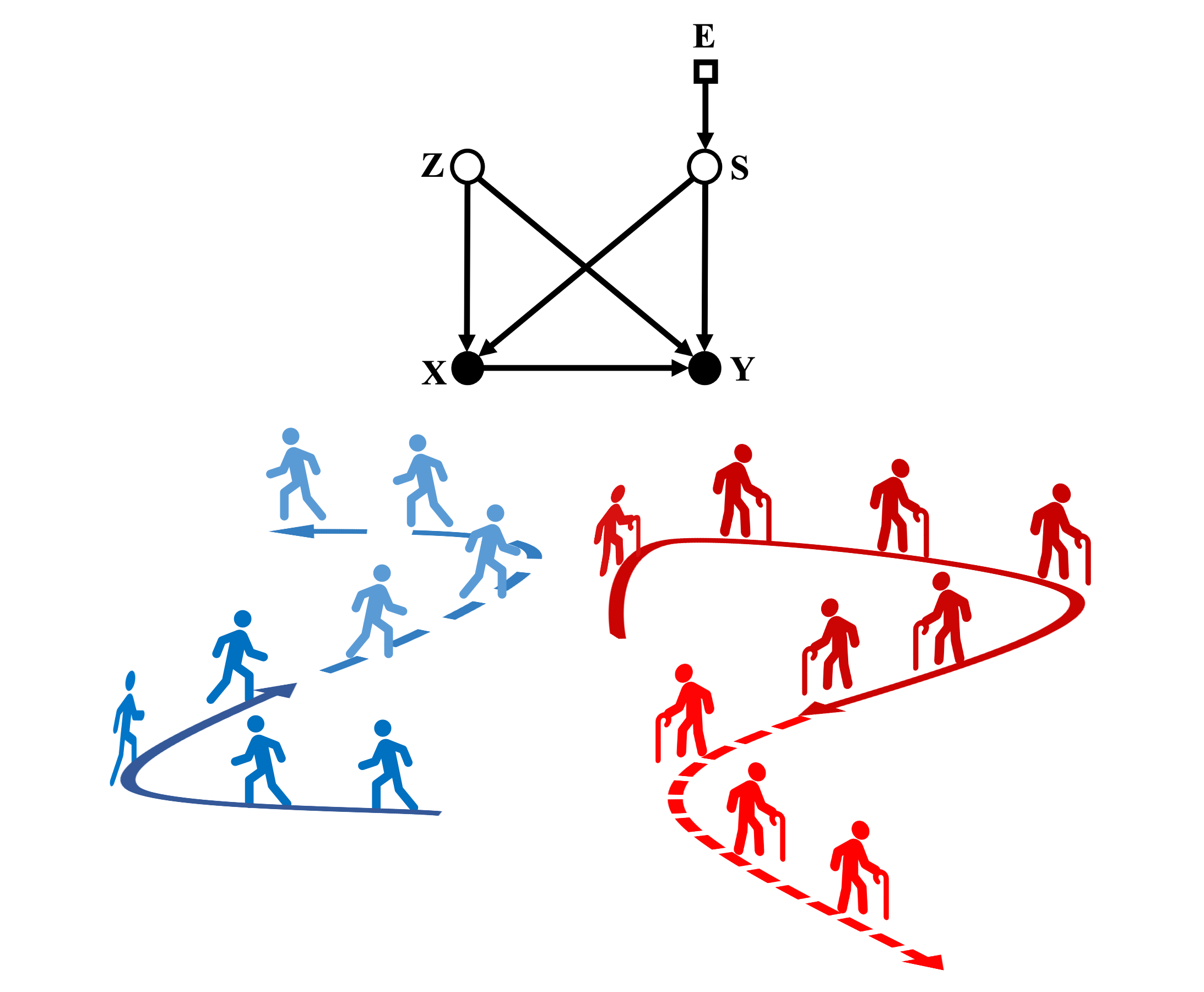

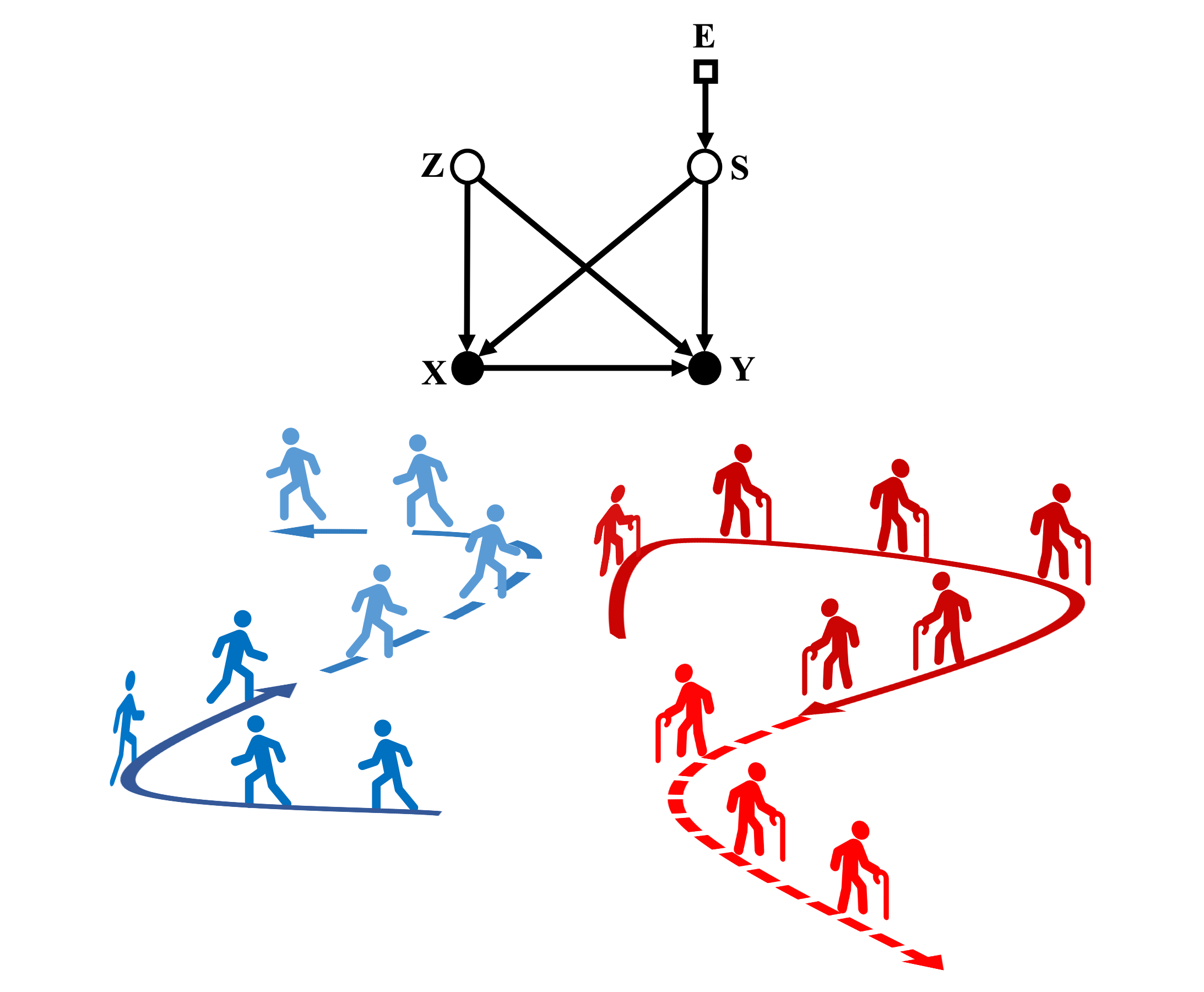

Conventional supervised learning methods typically assume i.i.d. samples and are found to be sensitive to out-of-distribution (OOD) data. We propose Generative Causal Representation Learning (GCRL), which leverages causality to facilitate knowledge transfer under distribution shifts. While we evaluate the effectiveness of our proposed method on human trajectory prediction models, GCRL can be applied to other domains as well. First, we propose a novel causal model that explains the generative factors in motion forecasting datasets using features that are common across all environments and features that are specific to each environment. Selection variables are used to determine which parts of the model can be directly transferred to a new environment without fine-tuning. Second, we propose an end-to-end variational learning paradigm to learn the causal mechanisms that generate observations from features. GCRL is supported by strong theoretical results that imply identifiability of the causal model under certain assumptions. Experimental results on synthetic and real-world motion forecasting datasets show the robustness and effectiveness of our proposed method for knowledge transfer under zero-shot and low-shot settings, where it substantially outperforms prior motion forecasting models on out-of-distribution prediction.

Cyclic causal models with discrete variables: Markov chain equilibrium semantics and sample ordering

In International Joint Conference on Artificial Intelligence (IJCAI). Beijing, China. 2013.

We analyze the foundations of cyclic causal models for discrete variables, and compare structural equation models (SEMs) to an alternative semantics as the equilibrium (stationary) distribution of a Markov chain. We show that, under general conditions, discrete cyclic SEMs cannot have independent noise: even in the simplest case, cyclic structural equation models imply constraints on the noise. We give a formalization of an alternative Markov chain equilibrium semantics which requires not only the causal graph but also a sample order. We show, both theoretically and empirically, how the resulting equilibrium is a function of the sample ordering.
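
To make the dependence on sample ordering concrete, here is a toy sketch. It is not the construction from the paper: the two binary variables, the conditional probabilities, and the helper names (`update_matrix`, `sweep_matrix`, `equilibrium`) are all assumptions chosen for illustration. Each variable in a two-node cycle is resampled from a conditional given the other, and the stationary distribution of one full sweep is computed for both update orders.

```python
import numpy as np

# Hypothetical conditionals for a two-variable cycle X <-> Y (illustrative numbers only).
P_X1_GIVEN_Y = {0: 0.9, 1: 0.2}  # P(X=1 | Y=y)
P_Y1_GIVEN_X = {0: 0.3, 1: 0.8}  # P(Y=1 | X=x)

STATES = [(0, 0), (0, 1), (1, 0), (1, 1)]  # joint states (x, y)


def update_matrix(var):
    """Row-stochastic 4x4 matrix that resamples one variable given the other."""
    T = np.zeros((4, 4))
    for i, (x, y) in enumerate(STATES):
        for j, (x2, y2) in enumerate(STATES):
            if var == "X" and y2 == y:      # X is resampled, Y stays fixed
                p1 = P_X1_GIVEN_Y[y]
                T[i, j] = p1 if x2 == 1 else 1.0 - p1
            elif var == "Y" and x2 == x:    # Y is resampled, X stays fixed
                p1 = P_Y1_GIVEN_X[x]
                T[i, j] = p1 if y2 == 1 else 1.0 - p1
    return T


def sweep_matrix(order):
    """Transition matrix of one sweep that updates variables in the given order."""
    T = np.eye(4)
    for var in order:
        T = T @ update_matrix(var)  # row-vector convention: earlier updates sit on the left
    return T


def equilibrium(order, n_iter=1000):
    """Approximate the stationary distribution of a sweep by power iteration."""
    T = sweep_matrix(order)
    pi = np.full(4, 0.25)
    for _ in range(n_iter):
        pi = pi @ T
    return pi


pi_xy = equilibrium("XY")  # update X first, then Y
pi_yx = equilibrium("YX")  # update Y first, then X
print(dict(zip(STATES, pi_xy.round(4).tolist())))
print(dict(zip(STATES, pi_yx.round(4).tolist())))
```

The two conditionals here were deliberately picked so that no single joint distribution has both of them as its conditionals. In that situation the two update orders settle into different joint equilibria, which is the kind of order dependence the abstract describes. If the conditionals were derived from one joint distribution, both orders would give that same joint.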