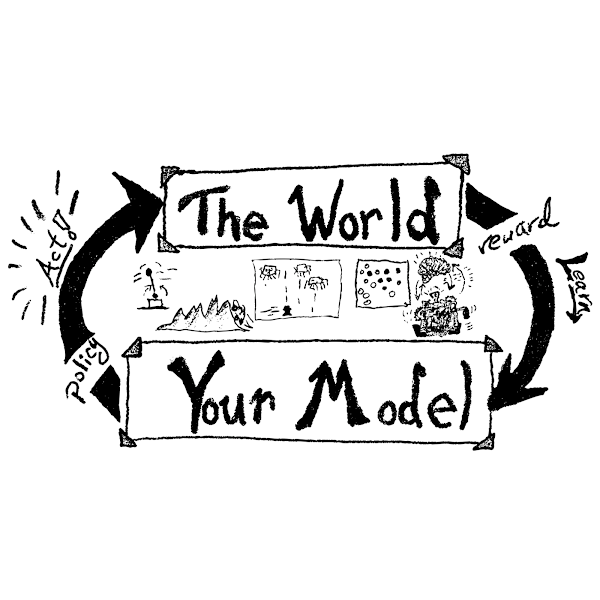

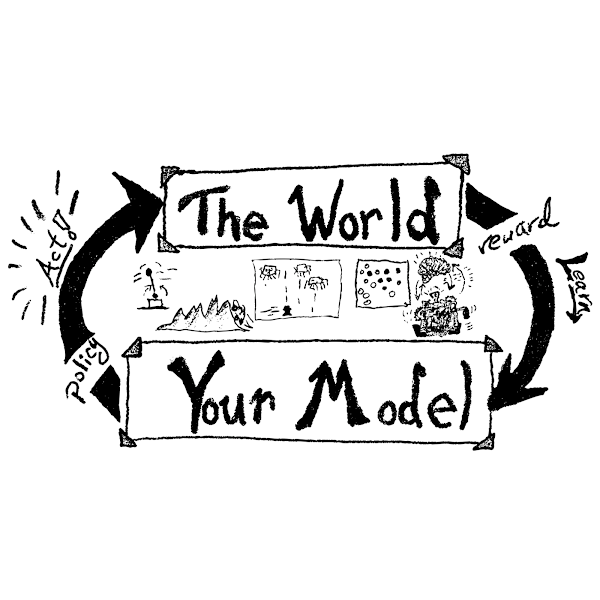

One of my core research areas is understanding the computational mechanisms that enable learning to perform complex tasks primarily from experience and feedback. This topic, called Reinforcement Learning (RL), has a complex history tying together fields as diverse as neuroscience, behavioural and developmental psychology, economics and computer science. See my RL Reading List for the papers and texts I used to teach the field. I approach RL as a computational researcher aiming to build Artificial Intelligence agents that learn the way humans do, not through any correspondence between their “brain” and its “neural” structure, but through the algorithms they both use to learn to act in a complex, mysterious world.

Learning Resources from The Lab

External Resources

- Revised Textbook by Sutton and Barto - http://incompleteideas.net/book/the-book-2nd.html

- Martha White has a great RL Fundamentals Course

- Sergey Levine has a very detailed Deep RL Course

Our Papers on Reinforcement Learning

ChemGymRL: An Interactive Framework for Reinforcement Learning for Digital Chemistry

Chris Beeler, Sriram Ganapathi Subramanian, Kyle Sprague, Nouha Chatti, Colin Bellinger, Mitchell Shahen, Nicholas Paquin, Mark Baula, Amanuel Dawit, Zihan Yang, Xinkai Li, Mark Crowley, and Isaac Tamblyn.

Digital Discovery. 3, Feb, 2024.

This paper provides a simulated laboratory for making use of reinforcement learning (RL) for material design, synthesis, and discovery. Since RL is fairly data intensive, training agents ‘on-the-fly’ by taking actions in the real world is infeasible and possibly dangerous. Moreover, chemical processing and discovery involves challenges which are not commonly found in RL benchmarks and therefore offer a rich space to work in. We introduce a set of highly customizable and open-source RL environments, ChemGymRL, implementing the standard gymnasium API. ChemGymRL supports a series of interconnected virtual chemical benches where RL agents can operate and train. The paper introduces and details each of these benches using well-known chemical reactions as illustrative examples, and trains a set of standard RL algorithms in each of these benches. Finally, discussion and comparison of the performances of several standard RL methods are provided in addition to a list of directions for future work as a vision for the further development and usage of ChemGymRL.
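The Gymnasium-style interaction loop mentioned in the abstract can be sketched as follows. This is only an illustration: `ToyTitrationBench` and `run_episode` are hypothetical names invented here, the dynamics are made up, and nothing below is taken from the ChemGymRL code base. The only thing it mirrors is the Gymnasium convention that `reset` returns `(observation, info)` and `step` returns `(observation, reward, terminated, truncated, info)`.

```python
class ToyTitrationBench:
    """Hypothetical stand-in for a virtual bench (not a ChemGymRL class)."""

    def __init__(self, target=0.5, max_steps=20):
        self.target = target        # desired fill level (assumed toy goal)
        self.max_steps = max_steps  # episode length cap
        self.level = 0.0
        self.steps = 0

    def reset(self, seed=None):
        # seed is accepted for API compatibility; the toy bench is deterministic
        self.level = 0.0
        self.steps = 0
        return self.level, {}

    def step(self, action):
        # action: amount of reagent to add, clipped to [0, 0.2]
        amount = max(0.0, min(0.2, action))
        self.level = min(1.0, self.level + amount)
        self.steps += 1
        error = abs(self.level - self.target)
        reward = -error                     # closer to the target is better
        terminated = error < 0.05           # goal reached
        truncated = self.steps >= self.max_steps
        return self.level, reward, terminated, truncated, {}


def run_episode(env, policy, seed=0):
    """Run one episode and return (total reward, final observation)."""
    obs, info = env.reset(seed=seed)
    total, done = 0.0, False
    while not done:
        obs, reward, terminated, truncated, info = env.step(policy(obs))
        total += reward
        done = terminated or truncated
    return total, obs


if __name__ == "__main__":
    # A fixed policy that always adds the same small amount of reagent.
    print(run_episode(ToyTitrationBench(), lambda obs: 0.1))
```

Because the loop only relies on the `reset`/`step` signatures, the same `run_episode` function should in principle work with any environment that follows the same convention.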

Learning from Multiple Independent Advisors in Multi-agent Reinforcement Learning

In Proceedings of the 22nd International Conference on Autonomous Agents and MultiAgent Systems (AAMAS). International Foundation for Autonomous Agents and Multiagent Systems (IFAAMAS), London, United Kingdom. Sep, 2023.

Multi-agent reinforcement learning typically suffers from the problem of sample inefficiency, where learning suitable policies involves the use of many data samples. Learning from external demonstrators is a possible solution that mitigates this problem. However, most prior approaches in this area assume the presence of a single demonstrator. Leveraging multiple knowledge sources (i.e., advisors) with expertise in distinct aspects of the environment could substantially speed up learning in complex environments. This paper considers the problem of simultaneously learning from multiple independent advisors in multi-agent reinforcement learning. The approach leverages a two-level Q-learning architecture, and extends this framework from single-agent to multi-agent settings. We provide principled algorithms that incorporate a set of advisors by both evaluating the advisors at each state and subsequently using the advisors to guide action selection. Also, we provide theoretical convergence and sample complexity guarantees. Experimentally, we validate our approach in three different test-beds and show that our algorithms give better performances than baselines, can effectively integrate the combined expertise of different advisors, and learn to ignore bad advice.

ChemGymRL: An Interactive Framework for Reinforcement Learning for Digital Chemistry

In NeurIPS 2023 AI for Science Workshop. New Orleans, USA. Dec, 2023.

This paper provides a simulated laboratory for making use of Reinforcement Learning (RL) for chemical discovery. Since RL is fairly data intensive, training agents ‘on-the-fly’ by taking actions in the real world is infeasible and possibly dangerous. Moreover, chemical processing and discovery involves challenges which are not commonly found in RL benchmarks and therefore offer a rich space to work in. We introduce a set of highly customizable and open-source RL environments, ChemGymRL, implementing the standard Gymnasium API. ChemGymRL supports a series of interconnected virtual chemical benches where RL agents can operate and train. The paper introduces and details each of these benches using well-known chemical reactions as illustrative examples, and trains a set of standard RL algorithms in each of these benches. Finally, discussion and comparison of the performances of several standard RL methods are provided in addition to a list of directions for future work as a vision for the further development and usage of ChemGymRL.

Demonstrating ChemGymRL: An Interactive Framework for Reinforcement Learning for Digital Chemistry

In NeurIPS 2023 AI for Accelerated Materials Discovery (AI4Mat) Workshop. New Orleans, USA. Dec, 2023.

This tutorial describes a simulated laboratory for making use of reinforcement learning (RL) for chemical discovery. A key advantage of the simulated environment is that it enables RL agents to be trained safely and efficiently. In addition, it offers an excellent test-bed for RL in general, with challenges which are uncommon in existing RL benchmarks. The simulated laboratory, denoted ChemGymRL, is open-source, implemented according to the standard Gymnasium API, and is highly customizable. It supports a series of interconnected virtual chemical benches where RL agents can operate and train. Within this tutorial, we introduce the environment, demonstrate how to train off-the-shelf RL algorithms on the benches, and show how to modify the benches by adding additional reactions and other capabilities. In addition, we discuss future directions for ChemGymRL benches and RL for laboratory automation and the discovery of novel synthesis pathways. The software, documentation and tutorials are available here: https://www.chemgymrl.com

ChemGymRL: An Interactive Framework for Reinforcement Learning for Digital Chemistry

Chris Beeler, Sriram Ganapathi Subramanian, Kyle Sprague, Nouha Chatti, Colin Bellinger, Mitchell Shahen, Nicholas Paquin, Mark Baula, Amanuel Dawit, Zihan Yang, Xinkai Li, Mark Crowley, and Isaac Tamblyn.

In ICML 2023 Synergy of Scientific and Machine Learning Modeling (SynS&ML) Workshop. Jul, 2023.

This paper provides a simulated laboratory for making use of Reinforcement Learning (RL) for chemical discovery. Since RL is fairly data intensive, training agents ‘on-the-fly’ by taking actions in the real world is infeasible and possibly dangerous. Moreover, chemical processing and discovery involves challenges which are not commonly found in RL benchmarks and therefore offer a rich space to work in. We introduce a set of highly customizable and open-source RL environments, ChemGymRL, based on the standard Open AI Gym template. ChemGymRL supports a series of interconnected virtual chemical benches where RL agents can operate and train. The paper introduces and details each of these benches using well-known chemical reactions as illustrative examples, and trains a set of standard RL algorithms in each of these benches. Finally, discussion and comparison of the performances of several standard RL methods are provided in addition to a list of directions for future work as a vision for the further development and usage of ChemGymRL.

Multi-Agent Advisor Q-Learning

In International Joint Conference on Artificial Intelligence (IJCAI): Journal Track. Macao, China. Aug, 2023.

In the last decade, there have been significant advances in multi-agent reinforcement learning (MARL) but there are still numerous challenges, such as high sample complexity and slow convergence to stable policies, that need to be overcome before wide-spread deployment is possible. However, many real-world environments already, in practice, deploy sub-optimal or heuristic approaches for generating policies. An interesting question that arises is how to best use such approaches as advisors to help improve reinforcement learning in multi-agent domains. In this paper, we provide a principled framework for incorporating action recommendations from online sub-optimal advisors in multi-agent settings. We describe the problem of ADvising Multiple Intelligent Reinforcement Agents (ADMIRAL) in nonrestrictive general-sum stochastic game environments and present two novel Q-learning based algorithms: ADMIRAL - Decision Making (ADMIRAL-DM) and ADMIRAL - Advisor Evaluation (ADMIRAL-AE), which allow us to improve learning by appropriately incorporating advice from an advisor (ADMIRAL-DM), and evaluate the effectiveness of an advisor (ADMIRAL-AE). We analyze the algorithms theoretically and provide fixed point guarantees regarding their learning in general-sum stochastic games. Furthermore, extensive experiments illustrate that these algorithms: can be used in a variety of environments, have performances that compare favourably to other related baselines, can scale to large state-action spaces, and are robust to poor advice from advisors.

Dynamic Observation Policies in Observation Cost-Sensitive Reinforcement Learning

In Workshop on Advancing Neural Network Training: Computational Efficiency, Scalability, and Resource Optimization (WANT@NeurIPS 2023). New Orleans, USA. 2023.

Reinforcement learning (RL) has been shown to learn sophisticated control policies for complex tasks including games, robotics, heating and cooling systems and text generation. The action-perception cycle in RL, however, generally assumes that a measurement of the state of the environment is available at each time step without a cost. In applications such as materials design, deep-sea and planetary robot exploration and medicine, however, there can be a high cost associated with measuring, or even approximating, the state of the environment. In this paper, we survey the recently growing literature that adopts the perspective that an RL agent might not need, or even want, a costly measurement at each time step. Within this context, we propose the Deep Dynamic Multi-Step Observationless Agent (DMSOA), contrast it with the literature and empirically evaluate it on OpenAI Gym and Atari Pong environments. Our results show that DMSOA learns a better policy with fewer decision steps and measurements than the considered alternative from the literature.

Balancing Information with Observation Costs in Deep Reinforcement Learning

In Canadian Conference on Artificial Intelligence. Canadian Artificial Intelligence Association (CAIAC), Toronto, Ontario, Canada. May, 2022.

The use of Reinforcement Learning (RL) in scientific applications, such as materials design and automated chemistry, is increasing. A major challenge, however, lies in the fact that measuring the state of the system is often costly and time consuming in scientific applications, whereas policy learning with RL requires a measurement after each time step. In this work, we make the measurement costs explicit in the form of a costed reward and propose the active-measure with costs framework that enables off-the-shelf deep RL algorithms to learn a policy for both selecting actions and determining whether or not to measure the state of the system at each time step. In this way, the agents learn to balance the need for information with the cost of information. Our results show that when trained under this regime, the Dueling DQN and PPO agents can learn optimal action policies whilst making up to 50% fewer state measurements, and recurrent neural networks can produce a greater than 50% reduction in measurements. We postulate that these reductions can help to lower the barrier to applying RL to real-world scientific applications.
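One way to picture the costed-reward idea described above is an environment wrapper in which each action is a pair: a control action and a flag saying whether to measure. The sketch below is an assumption-laden illustration, not the framework from the paper: `MeasureCostWrapper`, `CounterEnv`, the constant `measure_cost`, and the choice to return the last measured observation when no measurement is taken are all hypothetical.

```python
class CounterEnv:
    """Tiny hypothetical environment with Gymnasium-style reset/step."""

    def reset(self, seed=None):
        self.state = 0
        return self.state, {}

    def step(self, action):
        self.state += action
        terminated = self.state >= 3
        return self.state, 1.0, terminated, False, {}


class MeasureCostWrapper:
    """Actions become (control, measure) pairs; measuring costs reward."""

    def __init__(self, env, measure_cost=0.1):
        self.env = env
        self.measure_cost = measure_cost  # assumed constant per-measurement cost
        self.last_obs = None

    def reset(self, seed=None):
        # The initial state is assumed to be observed for free.
        self.last_obs, info = self.env.reset(seed=seed)
        return self.last_obs, info

    def step(self, action):
        control, measure = action
        obs, reward, terminated, truncated, info = self.env.step(control)
        if measure:
            self.last_obs = obs            # fresh measurement of the state
            reward -= self.measure_cost    # costed reward: pay for information
        info = dict(info, measured=bool(measure))
        # Without a measurement the agent only sees the stale observation.
        return self.last_obs, reward, terminated, truncated, info


if __name__ == "__main__":
    env = MeasureCostWrapper(CounterEnv(), measure_cost=0.25)
    print(env.reset())
    print(env.step((1, 0)))  # act without measuring
    print(env.step((1, 1)))  # act and pay to measure
```

Since the wrapper keeps the `reset`/`step` signatures, an off-the-shelf agent could be trained on the wrapped environment with the measure flag treated as part of its action space.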

Multi-Agent Advisor Q-Learning

Journal of Artificial Intelligence Research (JAIR). 74, May, 2022.

In the last decade, there have been significant advances in multi-agent reinforcement learning (MARL) but there are still numerous challenges, such as high sample complexity and slow convergence to stable policies, that need to be overcome before wide-spread deployment is possible. However, many real-world environments already, in practice, deploy sub-optimal or heuristic approaches for generating policies. An interesting question that arises is how to best use such approaches as advisors to help improve reinforcement learning in multi-agent domains. In this paper, we provide a principled framework for incorporating action recommendations from online sub-optimal advisors in multi-agent settings. We describe the problem of ADvising Multiple Intelligent Reinforcement Agents (ADMIRAL) in nonrestrictive general-sum stochastic game environments and present two novel Q-learning based algorithms: ADMIRAL - Decision Making (ADMIRAL-DM) and ADMIRAL - Advisor Evaluation (ADMIRAL-AE), which allow us to improve learning by appropriately incorporating advice from an advisor (ADMIRAL-DM), and evaluate the effectiveness of an advisor (ADMIRAL-AE). We analyze the algorithms theoretically and provide fixed point guarantees regarding their learning in general-sum stochastic games. Furthermore, extensive experiments illustrate that these algorithms: can be used in a variety of environments, have performances that compare favourably to other related baselines, can scale to large state-action spaces, and are robust to poor advice from advisors.

Scientific Discovery and the Cost of Measurement – Balancing Information and Cost in Reinforcement Learning

In 1st Annual AAAI Workshop on AI to Accelerate Science and Engineering (AI2ASE). Feb, 2022.

The use of reinforcement learning (RL) in scientific applications, such as materials design and automated chemistry, is increasing. A major challenge, however, lies in the fact that measuring the state of the system is often costly and time consuming in scientific applications, whereas policy learning with RL requires a measurement after each time step. In this work, we make the measurement costs explicit in the form of a costed reward and propose a framework that enables off-the-shelf deep RL algorithms to learn a policy for both selecting actions and determining whether or not to measure the current state of the system at each time step. In this way, the agents learn to balance the need for information with the cost of information. Our results show that when trained under this regime, the Dueling DQN and PPO agents can learn optimal action policies whilst making up to 50% fewer state measurements, and recurrent neural networks can produce a greater than 50% reduction in measurements. We postulate that these reductions can help to lower the barrier to applying RL to real-world scientific applications.

Decentralized Mean Field Games

In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI-2022). Virtual. Feb, 2022.

Multiagent reinforcement learning algorithms have not been widely adopted in large scale environments with many agents as they often scale poorly with the number of agents. Using mean field theory to aggregate agents has been proposed as a solution to this problem. However, almost all previous methods in this area make a strong assumption of a centralized system where all the agents in the environment learn the same policy and are effectively indistinguishable from each other. In this paper, we relax this assumption about indistinguishable agents and propose a new mean field system known as Decentralized Mean Field Games, where each agent can be quite different from others. All agents learn independent policies in a decentralized fashion, based on their local observations. We define a theoretical solution concept for this system and provide a fixed point guarantee for a Q-learning based algorithm in this system. A practical consequence of our approach is that we can address a ‘chicken-and-egg’ problem in empirical mean field reinforcement learning algorithms. Further, we provide Q-learning and actor-critic algorithms that use the decentralized mean field learning approach and give stronger performances compared to common baselines in this area. In our setting, agents do not need to be clones of each other and learn in a fully decentralized fashion. Hence, for the first time, we show the application of mean field learning methods in fully competitive environments, large-scale continuous action space environments, and other environments with heterogeneous agents. Importantly, we also apply the mean field method in a ride-sharing problem using a real-world dataset. We propose a decentralized solution to this problem, which is more practical than existing centralized training methods.

Investigation of Independent Reinforcement Learning Algorithms in Multi-Agent Environments

Frontiers in Artificial Intelligence. Sep, 2022.

Independent reinforcement learning algorithms have no theoretical guarantees for finding the best policy in multi-agent settings. However, in practice, prior works have reported good performance with independent algorithms in some domains and bad performance in others. Moreover, a comprehensive study of the strengths and weaknesses of independent algorithms is lacking in the literature. In this paper, we carry out an empirical comparison of the performance of independent algorithms on seven PettingZoo environments that span the three main categories of multi-agent environments, i.e., cooperative, competitive, and mixed. For the cooperative setting, we show that independent algorithms can perform on par with multi-agent algorithms in fully-observable environments, while adding recurrence improves the learning of independent algorithms in partially-observable environments. In the competitive setting, independent algorithms can perform on par or better than multi-agent algorithms, even in more challenging environments. We also show that agents trained via independent algorithms learn to perform well individually, but fail to learn to cooperate with allies and compete with enemies in mixed environments.

Investigation of Independent Reinforcement Learning Algorithms in Multi-Agent Environments

In NeurIPS 2021 Deep Reinforcement Learning Workshop. Dec, 2021.

Independent reinforcement learning algorithms have no theoretical guarantees for finding the best policy in multi-agent settings. However, in practice, prior works have reported good performance with independent algorithms in some domains and bad performance in others. Moreover, a comprehensive study of the strengths and weaknesses of independent algorithms is lacking in the literature. In this paper, we carry out an empirical comparison of the performance of independent algorithms on four PettingZoo environments that span the three main categories of multi-agent environments, i.e., cooperative, competitive, and mixed. We show that in fully-observable environments, independent algorithms can perform on par with multi-agent algorithms in cooperative and competitive settings. For the mixed environments, we show that agents trained via independent algorithms learn to perform well individually, but fail to learn to cooperate with allies and compete with enemies. We also show that adding recurrence improves the learning of independent algorithms in cooperative partially observable environments.

Partially Observable Mean Field Reinforcement Learning

In Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems (AAMAS). International Foundation for Autonomous Agents and Multiagent Systems, London, United Kingdom. May, 2021.

Traditional multi-agent reinforcement learning algorithms are not scalable to environments with more than a few agents, since these algorithms are exponential in the number of agents. Recent research has introduced successful methods to scale multi-agent reinforcement learning algorithms to many agent scenarios using mean field theory. Previous work in this field assumes that an agent has access to exact cumulative metrics regarding the mean field behaviour of the system, which it can then use to take its actions. In this paper, we relax this assumption and maintain a distribution to model the uncertainty regarding the mean field of the system. We consider two different settings for this problem. In the first setting, only agents in a fixed neighbourhood are visible, while in the second setting, the visibility of agents is determined at random based on distances. For each of these settings, we introduce a Q-learning based algorithm that can learn effectively. We prove that this Q-learning estimate stays very close to the Nash Q-value (under a common set of assumptions) for the first setting. We also empirically show our algorithms outperform multiple baselines in three different games in the MAgents framework, which supports large environments with many agents learning simultaneously to achieve possibly distinct goals.

Active Measure Reinforcement Learning for Observation Cost Minimization: A framework for minimizing measurement costs in reinforcement learning

In Canadian Conference on Artificial Intelligence. Springer, 2021.

Markov Decision Processes (MDPs) with explicit measurement cost are a class of environments in which the agent learns to maximize the costed return. Here, we define the costed return as the discounted sum of rewards minus the sum of the explicit cost of measuring the next state. The RL agent can freely explore the relationship between actions and rewards but is charged each time it measures the next state. Thus, an optimal agent must learn a policy without making a large number of measurements. We propose the active measure RL framework (Amrl) as a solution to this novel class of problem, and contrast it with standard reinforcement learning under full observability and planning under partial observability. We demonstrate that Amrl-Q agents learn to shift from a reliance on costly measurements to exploiting a learned transition model in order to reduce the number of real-world measurements and achieve a higher costed return. Our results demonstrate the superiority of Amrl-Q over standard RL methods, Q-learning and Dyna-Q, and POMCP for planning under a POMDP in environments with explicit measurement costs.
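As a small worked example of the costed return defined above, the function below computes the discounted sum of rewards and subtracts a charge for each measurement. The function name, the constant per-measurement `cost`, and the decision to leave the cost term undiscounted (a literal reading of the abstract's wording) are assumptions made for illustration; the paper itself should be consulted for the exact definition.

```python
def costed_return(rewards, measured, cost, gamma=0.99):
    """Discounted sum of rewards minus the total measurement cost.

    rewards:  list of per-step rewards r_0, r_1, ...
    measured: list of booleans, True where the next state was measured
    cost:     assumed constant charge per measurement (left undiscounted here)
    gamma:    discount factor applied to rewards only
    """
    discounted = sum((gamma ** t) * r for t, r in enumerate(rewards))
    total_cost = cost * sum(1 for m in measured if m)
    return discounted - total_cost


if __name__ == "__main__":
    # Rewards: 1 + 0.5 + 0.25 = 1.75; two measurements at 0.5 each cost 1.0.
    print(costed_return([1, 1, 1], [True, False, True], cost=0.5, gamma=0.5))
```

Under this reading, an agent that skips a measurement keeps the same discounted reward but avoids the charge, which is the trade-off the abstract describes.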

Deep Multi Agent Reinforcement Learning for Autonomous Driving

In Canadian Conference on Artificial Intelligence. May, 2020.

Learning Multi-Agent Communication with Reinforcement Learning

In Conference on Reinforcement Learning and Decision Making (RLDM-19). Montreal, Canada. 2019.

Training Cooperative Agents for Multi-Agent Reinforcement Learning

In Proc. of the 18th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2019). Montreal, Canada. 2019.

A Complementary Approach to Improve WildFire Prediction Systems.

In Neural Information Processing Systems (AI for social good workshop). NeurIPS. 2018.

Learning Forest Wildfire Dynamics from Satellite Images Using Reinforcement Learning

In Conference on Reinforcement Learning and Decision Making. Ann Arbor, MI, USA. 2017.

Policy Gradient Optimization Using Equilibrium Policies for Spatial Planning Domains

Mark Crowley. In 13th INFORMS Computing Society Conference. Santa Fe, NM, United States. 2013.

Equilibrium Policy Gradients for Spatiotemporal Planning

Mark Crowley. UBC Library, Vancouver, BC, Canada. 2011.

In spatiotemporal planning, agents choose actions at multiple locations in space over some planning horizon to maximize their utility and satisfy various constraints. In forestry planning, for example, the problem is to choose actions for thousands of locations in the forest each year. The actions at each location could include harvesting trees, treating trees against disease and pests, or doing nothing. A utility model could place value on sale of forest products, ecosystem sustainability or employment levels, and could incorporate legal and logistical constraints such as avoiding large contiguous areas of clearcutting and managing road access. Planning requires a model of the dynamics. Existing simulators developed by forestry researchers can provide detailed models of the dynamics of a forest over time, but these simulators are often not designed for use in automated planning. This thesis presents spatiotemporal planning in terms of factored Markov decision processes. A policy gradient planning algorithm optimizes a stochastic spatial policy using existing simulators for dynamics. When a planning problem includes spatial interaction between locations, deciding on an action to carry out at one location requires considering the actions performed at other locations. This spatial interdependence is common in forestry and other environmental planning problems and makes policy representation and planning challenging. We define a spatial policy in terms of local policies defined as distributions over actions at one location conditioned upon actions at other locations. A policy gradient planning algorithm using this spatial policy is presented which uses Markov Chain Monte Carlo simulation to sample the landscape policy, estimate its gradient and use this gradient to guide policy improvement. Evaluation is carried out on a forestry planning problem with 1880 locations using a variety of value models and constraints.
The distribution over joint actions at all locations can be seen as the equilibrium of a cyclic causal model. This equilibrium semantics is compared to Structural Equation Models. We also define an algorithm for approximating the equilibrium distribution for cyclic causal networks which exploits graphical structure and analyse when the algorithm is exact.